Generative artificial intelligence (AI) has entered the mainstream. The technology harnesses the power of machine learning by training software to study patterns in data and using the acquired knowledge to generate new content in response to human input. The technology has led to exciting possibilities for enhancing creativity, from generating logos and social media postings to producing new software, poetry, musical compositions, and artwork. But it has not done so without controversy.

Earlier this year, stock-image aggregator Getty Images and a trio of artists separately sued London start-up Stability AI for copyright infringement based on its “Stable Diffusion” technology.1 Stable Diffusion is an open-source AI art generator that creates photo-realistic images in response to users’ text prompts.2 It does so by “training” itself to identify relationships between objects and text using billions of digital images scraped from the web. In a sense, this process is similar to an experienced artist using her decades’ worth of experience to create customized paintings. The difference is that Stable Diffusion’s training is carried out more quickly and on a much more massive scale.

The problem, according to the plaintiffs, is that Stability AI does not license any of the images it uses to train the AI, many of which are protected by copyright. The plaintiff artists insist that some of the generated images are too similar in appearance to the copyrighted images on which the AI is trained.

Stability AI (and its co-defendants) have sought to dismiss the lawsuits. But, if the cases are allowed to proceed, courts will have to address novel questions of copyright law, including whether the enigmatic doctrine of “fair use” applies to AI technology. They will have to do so, moreover, against a recently altered legal landscape, thanks to the Supreme Court’s Warhol ruling issued earlier this year. There, the Court held that the commercial licensing of Andy Warhol’s Orange Prince silkscreen portrait for use on a magazine cover did not constitute transformative use of a copyrighted photo of music artist Prince. The Court reached this conclusion even though the portrait imbued the original photo with new expression, purportedly to convey the dehumanizing nature of celebrity. In so holding, the Court appeared to cabin transformative uses to those that do not serve substantially the same purpose as the originals.3

As explained below, Warhol may have a significant effect on copyright liability in the generative-AI context, and more immediately on the copyright suits involving Stability AI.

The Stable Diffusion Technology: A “21st-Century Collage Tool” or a Sophisticated System for Creating New Works of Art from Scratch?

The plaintiff artists describe Stable Diffusion as a “21st-century collage tool” analogous to an Internet search engine that “looks up” a user’s query in a “massive database” and patches together bits and pieces of digital images that hit on the query.4 But that analogy has drawn criticism as overly simplistic and inaccurate. In reality, Stable Diffusion uses machine learning to create images from scratch.

At a high level, the image-generation process boils down to three steps.5 The first is the “input step” in which the system amasses as much source data as possible to train the AI. Stable Diffusion gets its training data from a collection of five billion publicly available images (and their accompanying captions, annotations, and metadata) scraped from the web and indexed by German nonprofit Large-Scale Artificial Intelligence Open Network (LAION). The plaintiffs allege that these training image-text pairings are ingested by Stable Diffusion and loaded into computer memory, but it is unclear whether copies of the images are made at this stage (and, if so, where they are saved). What is clear, though, is that the training images are not stored in the Stable Diffusion software itself, which is too small to contain copies (even compressed copies) of such images.
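The size point can be checked with rough arithmetic. The sketch below is illustrative only: the five-billion image count comes from the description above, but the model size (about 4 GB) and the size of a small compressed image (about 50 KB) are assumptions introduced here, not figures from the complaints.

```python
# Back-of-the-envelope check: could the model file hold copies of its training images?
TRAINING_IMAGES = 5_000_000_000   # "five billion" images, per the description above
MODEL_SIZE_BYTES = 4 * 10**9      # ASSUMPTION: roughly 4 GB of model weights
COMPRESSED_IMAGE_BYTES = 50_000   # ASSUMPTION: about 50 KB for a small compressed image


def bytes_per_image(model_bytes, n_images):
    """Storage the model could devote to each training image, on average."""
    return model_bytes / n_images


def images_that_would_fit(model_bytes, image_bytes):
    """Number of compressed images that could fit if the model stored nothing else."""
    return model_bytes // image_bytes


budget = bytes_per_image(MODEL_SIZE_BYTES, TRAINING_IMAGES)
fit = images_that_would_fit(MODEL_SIZE_BYTES, COMPRESSED_IMAGE_BYTES)
share = fit / TRAINING_IMAGES

print(f"Average budget per training image: {budget:.2f} bytes")
print(f"Compressed images that would fit: {fit:,}")
print(f"Share of the training set: {share:.4%}")
```

Under these assumed figures, the model has less than one byte available per training image, which is consistent with the observation that it cannot contain even compressed copies of the full training set.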

The second step is the “training step.” The system first encodes the training images and accompanying text by converting them into mathematical representations called “latent” representations. One can think of these representations as giant arrays of numbers, with the values and positions of the numbers defining various aspects of the images. These encoded images take up less space than the originals and result in faster processing. Then, having encoded the image-text pairs, the system iteratively tweaks the data by gradually adding “noise” until the images become distorted and are no longer recognizable (a process known as “diffusion”).6 Once a set of diffused images is created, the system then reverses this process, iteratively learning to remove the noise until the original images are reconstructed and matched to their corresponding text. At first, the system does a poor job of this. But by repeating the process many times on billions of images, the AI learns how to identify specific objects, lines, colors, shades, and other qualities from random noise—or, stated differently, to create an image containing such attributes.
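The noise-adding half of the training step can be illustrated with a toy sketch. It is not Stability AI's code: the "latent" is a short list of numbers standing in for an encoded image, the noise schedule (`betas`) is an assumed linear schedule, and all function names are hypothetical. The learning half of the process (training a network to undo the noise) is deliberately omitted.

```python
import math
import random


def add_noise_step(latent, beta, rng):
    """Shrink the signal slightly and mix in Gaussian noise (one diffusion step)."""
    keep = math.sqrt(1.0 - beta)
    spread = math.sqrt(beta)
    return [keep * v + spread * rng.gauss(0.0, 1.0) for v in latent]


def diffuse(latent, betas, rng):
    """Return the latent after every step, starting with the untouched original."""
    trajectory = [list(latent)]
    for beta in betas:
        trajectory.append(add_noise_step(trajectory[-1], beta, rng))
    return trajectory


def correlation(a, b):
    """Pearson correlation: 1.0 means identical pattern, near 0 means unrelated."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


rng = random.Random(0)
# ASSUMPTION: a smooth wave stands in for the latent representation of an image.
original = [math.sin(i / 5.0) for i in range(256)]
# ASSUMPTION: 1,000 steps with noise levels rising linearly from 0.0001 to 0.02.
betas = [0.0001 + (0.02 - 0.0001) * t / 999 for t in range(1000)]

trajectory = diffuse(original, betas, rng)
for step in (0, 10, 100, 1000):
    print(step, round(correlation(original, trajectory[step]), 3))
```

The printed correlation starts at 1 and falls toward zero as steps accumulate, mirroring the description of images being distorted until they are no longer recognizable.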

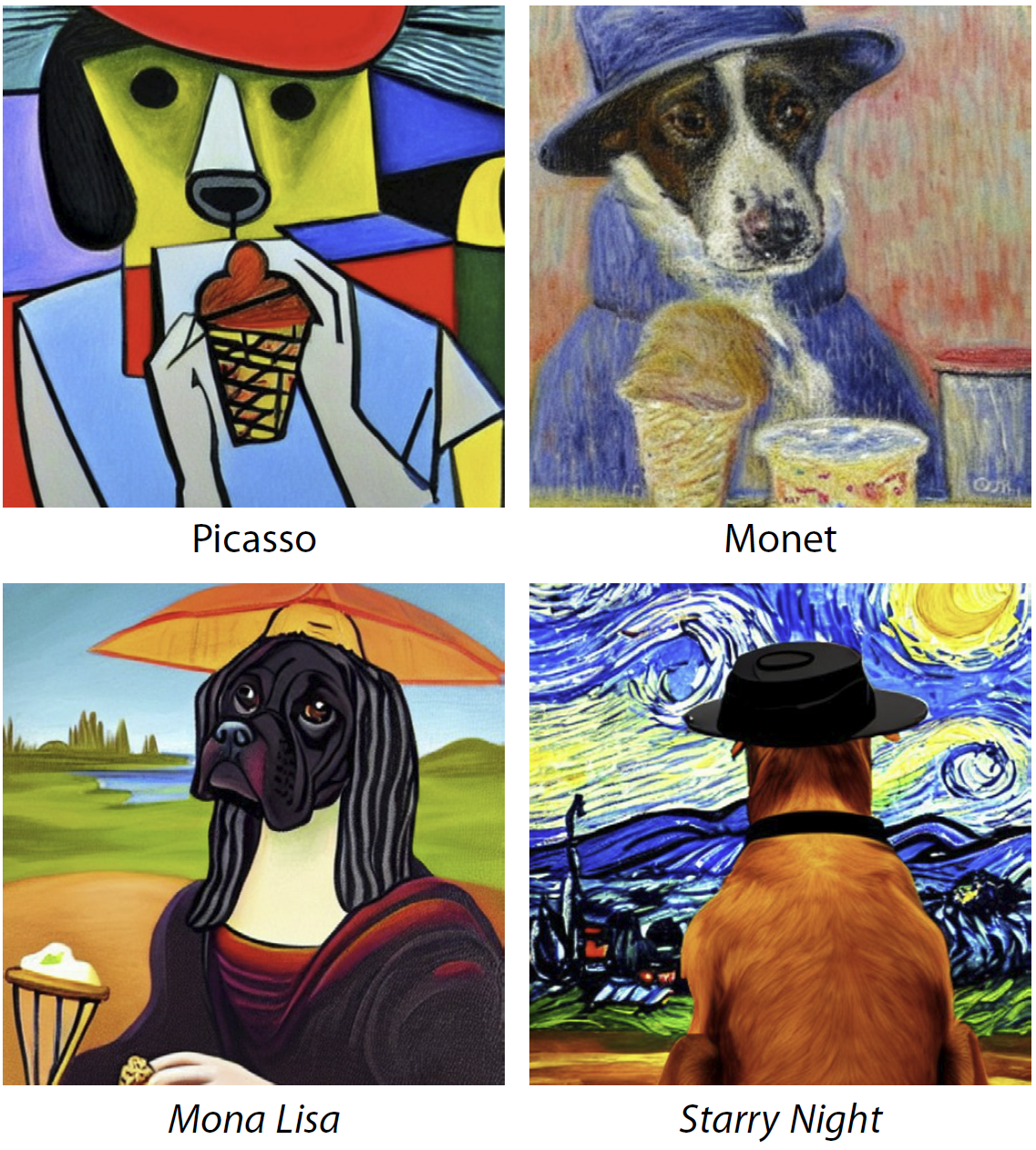

The final step is the “output step.” When a user enters a text prompt, the system converts the text into a latent representation. Then, beginning with a latent representation of a random diffused image, the system uses its training to determine how to successively remove noise so as to reveal an image “conditioned” by the user’s prompt; that is, containing the attributes referenced in the prompt (much like a sculptor chiseling away at a block of marble to create a statue matching a customer’s request). The resulting latent representation is then converted to a visual image that is displayed to the user, within seconds of the user inputting her prompt. For example, the text prompt “water color painting of contemplative dog wearing a hat and eating ice cream in the rain” produced the images in Figure 1.
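The output step can likewise be sketched as a loop that starts from random noise and repeatedly nudges it toward an estimate conditioned on the prompt. Everything here is a hypothetical stand-in: `encode_prompt` is a hash rather than a real text encoder, `predict_clean` is a hand-written rule rather than a trained network, and the assumption that the prompt fixes only some of the latent values is made purely for illustration.

```python
import hashlib
import random

LATENT_SIZE = 8
CONDITIONED = 4  # ASSUMPTION: the prompt pins down only the first 4 latent values


def encode_prompt(prompt):
    """Hypothetical text encoder: hash the prompt into CONDITIONED numbers in [-1, 1]."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    return [digest[i] / 127.5 - 1.0 for i in range(CONDITIONED)]


def predict_clean(latent, prompt_code):
    """Stand-in for the trained network: guess the noise-free latent.

    Prompt-related values come from the prompt; the rest are left as they are.
    """
    return prompt_code + latent[CONDITIONED:]


def generate(prompt, seed, steps=50, rate=0.2):
    """Start from random noise and move a little toward the estimate at each step."""
    rng = random.Random(seed)
    latent = [rng.gauss(0.0, 1.0) for _ in range(LATENT_SIZE)]
    code = encode_prompt(prompt)
    for _ in range(steps):
        estimate = predict_clean(latent, code)
        latent = [x + rate * (e - x) for x, e in zip(latent, estimate)]
    return latent


prompt = "water color painting of contemplative dog wearing a hat"
first = generate(prompt, seed=1)
second = generate(prompt, seed=2)
print([round(v, 3) for v in first])
print([round(v, 3) for v in second])
```

In this toy, both runs agree on the values tied to the prompt but differ elsewhere because each starts from different random noise. A real system has no lookup of stored images at this stage; the trained network supplies the estimate at every step.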

Importantly, the system is designed so that no particular training image has an undue influence on the AI. In one study, researchers intentionally tried to recreate particular images and were successful just 0.03% of the time.7 Nevertheless, users can request Stable Diffusion to generate an image “in the style” of a particular artist or work, which will cause the system to emulate features derived from that artist’s work(s).8 Examples of this type of output are shown in Figure 2.

Copyright Infringement and Fair Use as Applied to Stable Diffusion

The purpose of copyright law is to encourage creators to create so as to “enrich[] the general public through access to creative works.”9 The law achieves this purpose by striking a balance between “rewarding authors’ creations while also enabling others to build on that work.”10

To that end, copyright law allows creators to block others from reproducing and preparing variations of their copyrighted works (called “derivative works”) that are “substantially similar.” But it also limits creators’ rights to control their works. For example, general ideas such as motifs, genres, stereotypes, and tropes are not protectable—only specific expressions and arrangements of those ideas can be copyrighted.11 Further, the doctrine of “fair use” excuses certain types of copying that would otherwise constitute infringement. In effect, the doctrine provides “breathing space” for certain infringing uses such as criticism, commentary, news reporting, teaching, scholarship, and the like.12 To determine whether a particular infringing use qualifies as fair use, courts assess the following list of nonexhaustive factors:

- the purpose and character of the use, including whether such use is of a commercial nature or is for nonprofit educational purposes;

- the nature of the copyrighted work;

- the amount and substantiality of the portion used in relation to the copyrighted work as a whole; and

- the effect of the use upon the potential market for or value of the copyrighted work.13

In weighing these factors, courts “must keep in mind the public policy” underlying copyright law and determine whether permitting the use to continue is likely to “stimulate artistic creativity for the general public good.”14

Does Stable Diffusion Infringe Copyrighted Training Images?

While much attention has been given to whether Stable Diffusion’s use of training images qualifies as fair use, the antecedent question is whether such use infringes in the first place. There are three potential acts of infringement that could be relevant:

- Ingesting copyrighted images for subsequent use in training the AI (input step);

- Making intermediate copies of the images during training (training step); and

- Generating new images based on the training and users’ text prompts (output step).

With regard to the input step, the plaintiffs allege that training images are reproduced when they are ingested by Stable Diffusion so that latent representations can be created. Presumably, any such copies are retained by the system to permit it to assess the efficacy of the model. If reproductions are in fact made at this stage, the copying would likely qualify as an act of infringement. But the details surrounding the copies, such as what they look like and where and how long they are stored, remain a mystery. These details will likely come to light during discovery (assuming the lawsuits proceed past the pleading stage).

With regard to the training step, Getty alleges that intermediate copies of the training images are made en route to the AI generating an output image. For example, training images are encoded and compressed (converted to latent representations) and modified (by having noise added to the latent representations), and these intermediate copies are then saved. According to Getty, these copies qualify as infringing derivative works of the originals.

As a general matter, intermediate copying of a work can give rise to liability even when the copies are only ever used by a computer, and regardless of whether the computer’s output infringes.15 Getty might argue that the latent representations are nothing more than translated versions of the underlying images (much like a book translated into another language), and that converting an image from one form to another constitutes infringement. But the scenario here is arguably different from typical cases in which a work is transformed from one language or medium to another. Unlike in those cases, Stable Diffusion’s latent representations do not convey (in a perceptible sense, at least) the artistic expression of the underlying image itself.16

Further, to qualify as an infringing derivative work, a work must be substantially similar to the original.17 It is unclear whether Stable Diffusion’s latent representations would be considered substantially similar to the training images on which they are based. And a diffused latent representation with added noise would be even less similar—and, in fact, intentionally dissimilar—to its corresponding training image. Thus, the plaintiffs might have a difficult time showing that Stable Diffusion’s intermediate copies infringe. As a practical matter, though, this theory of infringement probably only matters if the plaintiffs are unable to show that the input or output images themselves infringe.

Finally, with regard to the output step, the plaintiffs allege that Stable Diffusion generates images that infringe the copyrighted training images. This is a viable argument in theory. But it is not enough that an output image merely “derives from”—in the ordinary sense of the phrase—a training image. Rather, as explained above, the alleged derivative must be analyzed to determine whether it is substantially similar to the training image. As the defendants point out, the plaintiff artists have not performed such an analysis on any particular image. Instead, they argue, as a general matter, that all output images infringe.18 Getty, for its part, also has not performed such an analysis. It alleges only that its entire “database” of copyrighted images is infringed.19

In most cases, it seems that an AI-generated image will not be substantially similar to any particular image on which it is trained. This is because, as explained above, Stable Diffusion is designed not to place undue weight on any one image during its training. And so any reproduction of a given training image is likely to be legally insignificant.20 Indeed, the plaintiff artists acknowledge that “none of the Stable Diffusion output images provided in response to a particular Text Prompt is likely to be a close match for any specific image in the training data.”21 Stability AI (and its co-defendants) argue that this concession tanks the plaintiff artists’ infringement claim.

However, users can force the AI to generate images bearing a resemblance to certain artists’ copyrighted works by asking the AI to produce the image “in the style of” such artists and works. And “style is one ingredient” of protectable expression.22 Thus, it is possible that an output image “in the style” of a particular work could be found to infringe the copyright in that work. But AI-generated images “in the style” of an artist (as opposed to a particular work) are unlikely to infringe because broad genres of artistic styles are not protectable.23 And so, for example, it is doubtful that an image of a dog “in the style of” Picasso would be found to infringe a Picasso painting.24

Notably, this “in the style of” infringement theory raises questions about what role end users should play in the infringement analysis. After all, it is the users’ prompts that condition the AI to produce potentially infringing output images in the first place. Arguably, an indirect infringement claim against Stability AI for its role in facilitating infringement by end users would be viable. But even then, Stable Diffusion has substantial noninfringing uses that would likely negate such a claim.25

On balance, it seems that the plaintiffs’ best infringement argument is for any images that are reproduced during the input step, as well as images generated during the output step that are substantially similar to the plaintiffs’ particular, identified training images.

Is Stable Diffusion’s Use of Copyrighted Images Fair Use?

Even assuming that Stable Diffusion infringes the plaintiffs’ copyrights, the question remains whether the doctrine of fair use excuses such infringement. As explained above, a court would analyze several factors to answer that question.

The Purpose and Character of the Use

The first fair-use factor is “the purpose and character of the use, including whether such use is of a commercial nature.”26 The key inquiry is whether the infringing work is “transformative”; that is, whether it “merely supersede[s] the objects of the original creation, or instead adds something new, with a further purpose or different character, altering the first with new expression, meaning, or message.”27 In Warhol, the Supreme Court clarified that the transformativeness inquiry should focus on “the problem of substitution”; that is, whether the infringing work supersedes the objects of, or supplants, the original.28 Accordingly, “[i]f an original work and a secondary use share the same or highly similar purposes, and the secondary use is of a commercial nature, the first factor is likely to weigh against fair use, absent some other justification for copying.”29

As to the input and intermediate steps, there are compelling arguments to be made on both sides of the fair-use issue, and the answer could depend on the precise manner in which a user utilizes the system. On the one hand, copies made by Stable Diffusion arguably serve a different purpose than the original training images, at least in some (or most) cases. Whereas the original images are designed for their aesthetic value and meant for public consumption, copies made during the input and training steps are designed solely to train the AI and are only ever “seen” by a computer.30

This type of copying is similar to utilitarian forms of copying that courts have held to be transformative. For example, in a pair of cases involving Google Books, the Second Circuit held that scanning and digitizing books to make them text searchable and to display snippets is transformative because its purpose and effect is to improve access to information rather than to exploit the copied books’ artistic expression.31 Courts have reached similar conclusions in cases involving other technologies in which copyrighted works are ingested into a database to facilitate a utilitarian purpose that is tangential to the aesthetic purpose for which the works were created.32 Also relevant are cases holding that making intermediate copies of software in order to reverse engineer the software for lawful purposes is sufficiently transformative.33 Disassembling software to understand its functional requirements for compatibility with a competitor’s hardware requires copying only nonprotectable elements and is necessary for creating competing software. In effect, the copying gives rise to “a new system created for new products.”34

These cases are informative here. As explained above, Stable Diffusion’s input and intermediate copying facilitates a purpose that is arguably different than the purpose for which the training images were originally created. In a sense, Stable Diffusion is more transformative than the technology at issue in the Google Books cases because no portion of the training images is ever shown to end users (unlike the Google Books snippets). Likewise, Stable Diffusion’s copying arguably involves a form of reverse engineering; that is, converting images into mathematical representations and then using those representations to understand the images’ attributes for downstream applications. In a sense, the copying is used to “teach” the AI, and teaching is generally considered a form of fair use, at least in some circumstances.35

On the other hand, there are important differences. For example, in the Google Books cases, the infringing snippets did not substitute for the underlying books. Quite the opposite—the snippets attracted attention to the books. Here, by contrast, Stable Diffusion uses the input and intermediate copies to create images that could compete with the originals, thus indirectly exploiting the originals’ artistic expression. And, even though the same was true in the reverse-engineering cases, the copying in those cases was necessary for the narrow purpose of facilitating interoperability of software.36 Here, by contrast, Stable Diffusion’s intermediate copying is more broadly used to imitate copied images that, as a general matter, include many more protectable elements than software. In that sense, Stable Diffusion arguably “merely recontextualiz[es] the original expression,” which is generally “not transformative.”37 Further, in Warhol, the Supreme Court dismissed the “value of copying” as a basis for finding that an infringing use qualifies as fair use, which may call into question certain forms of utilitarian copying that previously would have been found to qualify.38 These nuances could plausibly lead a court to find that Stable Diffusion’s input and intermediate copying is not sufficiently transformative after all.

Still further, a court could find that Stable Diffusion’s copies ultimately serve the same commercial purpose as the originals. Stability AI is itself a for-profit company, and even though it offers Stable Diffusion free of charge,39 its training images are used for a commercial purpose. For example, Getty alleges that it has “licensed millions of suitable digital assets for a variety of purposes related to artificial intelligence and machine learning,” and has announced a partnership with other generative AI companies to license its images.40 Getty would seem to have a strong argument that Stable Diffusion’s input and intermediate copies supersede the objects of, or supplant, Getty’s original images, which would seem to weigh against a finding of transformativeness under Warhol.

As to the output step, again, there are compelling arguments going both ways. At first blush, it would seem that any copying that Stable Diffusion performs is arguably transformative. Generally, an infringing work is more likely to be considered transformative if it “draw[s] from numerous sources, rather than . . . simply alter[s] or recast[s] a single work with a new aesthetic.”41 That is what Stable Diffusion does. Rather than reconstructing a single training image, the AI draws upon billions of such images to create new ones from scratch. In fact, repeating a single text prompt will lead to different outputs each time.42

But the plaintiffs would seem to have a strong argument under Warhol that Stable Diffusion’s output images serve as commercial substitutes for the originals. In particular, Stable Diffusion allows end users to generate customized images in the style of copyrighted works for free, thereby potentially supplanting the market for those original works. For example, a college student strapped for cash but wishing to own a Warhol might settle for a free AI-generated image in the same style to use in a digital art frame. Or a user might elect to use a highly customized AI-generated image that suits her specific needs rather than pay for a generic stock photo. If those output images are substantially similar to the original Warhol or generic stock image, the infringing uses would be unlikely to qualify as fair use.

The Nature of the Copyrighted Work

The second factor is “the nature of the copyrighted work,” which recognizes that creative works are “closer to the core of intended copyright protection than more fact-based works” and are therefore subject to greater protection.43 Unpublished works are likewise subject to greater protection.44 Here, the training images are works of art and photographs that have been previously published on the web. Notwithstanding that the images are published, this factor would likely cut against a finding of fair use because works of art and photographs, even if documentary in nature, are typically found to exhibit creativity and are thus subject to greater protection.

The Amount and Substantiality of the Portion Used

The third factor asks whether “the amount and substantiality of the portion used in relation to the copyrighted work as a whole” are reasonable in relation to the purpose of the copying.45 The more that is copied (and when the copied content is the “heart” of the original work), the less likely the infringing use will qualify as fair use, unless what is copied is necessary to effectuate a transformative purpose.46 Here, the training images are copied in their entirety to create the latent representations, which are then used to train the AI and create the output images. Presumably, there is no way to adequately train the AI without copying the entirety of the training images. Accordingly, to the extent that the input and training copying is found to be transformative, this factor weighs in favor of finding fair use, but perhaps only slightly. With regard to the output step, as explained above, the AI in most cases does not copy entire training images to generate its output images, which likewise favors fair use.

The Effect of the Use upon the Potential Market for or Value of the Copyrighted Work

The final factor is “the effect of the use upon the potential market for or value of the copyrighted work,” which requires courts to consider “not only the extent of market harm caused by the particular actions of the alleged infringer, but also whether unrestricted and widespread conduct of the sort engaged in by the defendant would result in a substantially adverse impact on the potential market for the original.”47 The more likely and significant the harm, the less likely the infringing use qualifies as fair use.

As to the input and intermediate steps, end users never see the copies of the training images, and so the copies are unlikely to affect the market for or value of the training images themselves. Further, the intermediate copies comprise latent representations and low-resolution, diffused versions of the images. Accordingly, the copies are unlikely to ever serve as a significant market replacement for the plaintiff’s high-quality images.48 However, as explained above, Getty alleges that it has licensed its images for use with generative-AI platforms. Thus, Getty would seem to have a strong argument that Stable Diffusion’s input and intermediate steps significantly harm this market.

As for the output step, as also explained above, at least in some instances, users can generate free output images that are substantially similar to the originals, thereby supplanting the market for those originals. This would counsel against a finding of fair use.

Stay Tuned for Developments in the Law

It seems that at least some of what Stable Diffusion does nominally qualifies as copyright infringement. But is it fair use? The technologically paradigm-shifting nature of AI makes this question difficult to answer, at least when applying current copyright law doctrine. Of course, throughout history, copyright law has had to evolve in order to adapt to technological changes. In such circumstances, the law “must be construed in light of [its] basic purpose,” which is to promote creativity.49

With that guiding principle in mind, at least before Warhol, the case for fair use might have been very strong. The law should arguably grant AI technology a certain amount of breathing room to encourage creativity in this new technological space. A ruling that such technology is unlawful could stifle creative expression in the AI context. It could also threaten creativity more broadly, as it would call into question the legality of artists who create their own works by borrowing or incorporating elements from other artists’ works. After all, “artists don’t create all on their own; they cannot do what they do without borrowing from or otherwise making use of the work of others.”50 Stated differently, “[n]othing comes from nothing[.]”51

But after Warhol, the answer might be different. In fact, the Court rejected the notion that fair use should apply broadly to copying that is deemed socially valuable and dismissed the contention that the Court’s holding would stifle creativity. The Court instead focused on incentivizing creativity through strong protections for copyright holders. As shown above, the Court’s ruling could cut against a finding of fair use, at least with respect to Getty, which alleged that it licenses its images to train AI in other contexts. In the end, Stable Diffusion seems to effectively harness the essence of copyrighted images for free. Something about that seems unsettling, if not unfair.

Eventually, Congress will likely have to step in and enact legislation addressing AI technology, as Congress alone “has the constitutional authority and the institutional ability to accommodate fully the varied permutations of competing interests that are inevitably implicated by such new technology.”52 And, indeed, both the Senate and the House have held hearings addressing these very issues.53 But until Congress acts, these types of policy considerations will have to be addressed by the courts.

Meanwhile, Stability AI recently announced that in the next release of Stable Diffusion, it will allow artists to opt out of having their works used in the training set.54 This measure will likely moot at least some of the copyright issues going forward. Nonetheless, the Stable Diffusion litigation is just the tip of the iceberg. Many other companies offer competing AI art generators that could also be implicated, as could other forms of generative AI, including chatbots, voice-synthesis tools, and music- and code-generation tools.55 Indeed, analogous lawsuits involving such technology have already begun to spring up.56

Finally, the future of generative-AI tech could depend on where the tech is offered. For example, Getty also sued Stability AI in the United Kingdom, which has a “fair dealing” doctrine that is generally considered narrower than its American “fair use” cousin. And so it is possible that Stable Diffusion could be legal in the United States but not in the United Kingdom. On the other end of the spectrum, Japan recently announced that it will not enforce copyrights on works used to train AI.57 It remains to be seen whether this announcement impacts how (and where) artists and AI tech companies choose to create and innovate.

Notes

- Andersen v. Stability AI Ltd., No. 3:23-cv-00201 (N.D. Cal. filed Jan. 13, 2023); Getty Images (U.S.), Inc. v. Stability AI, Ltd., No. 1:23-cv-00135 (D. Del. filed Feb. 3, 2023).

- Stable Diffusion Online, https://stablediffusionweb.com/.

- Andy Warhol Found. for the Visual Arts, Inc. v. Goldsmith, 143 S. Ct. 1258 (2023).

- Artists’ Compl. ¶¶ 4, 24, 65, 90.

- The description that follows derives from the complaints and other sources.

- This technique, which models the diffusion process in physics, serves as the technology’s namesake.

- Nicholas Carlini et al., Extracting Training Data from Diffusion Models (Jan. 30, 2023), https://arxiv.org/abs/2301.13188.

- In the original release, Stable Diffusion’s “in the style of” feature was apparently quite effective at generating images that looked like the originals. But Stability AI limited the efficacy of the feature in a subsequent release. Steve Dent, Stable Diffusion Update Removes Ability to Copy Artist Styles or Make NSFW Works, Engadget (Nov. 25, 2022), https://www.engadget.com/stable-diffusion-version-2-update-artist-styles-nsfw-work-124513511.html.

- U.S. Const. art. I, § 8, cl. 8; Fogerty v. Fantasy, Inc., 510 U.S. 517, 527 (1994).

- Kirtsaeng v. John Wiley & Sons, Inc., 579 U.S. 197, 204 (2016) (citing Fogerty, 510 U.S. at 526); Warhol, 143 S. Ct. at 1273 (“Copyright thus trades off the benefits of incentives to create against the costs of restrictions on copying.”).

- 17 U.S.C. § 102(b); Golan v. Holder, 565 U.S. 302, 328-29 (2012).

- 17 U.S.C. § 107; Campbell v. Acuff-Rose Music, Inc., 510 U.S. 569, 575-79 (1994).

- 17 U.S.C. § 107.

- Sega Enters. Ltd. v. Accolade, Inc., 977 F.2d 1510, 1527 (9th Cir. 1992) (quoting Sony Corp. of Am. v. Universal City Studios, Inc., 464 U.S. 417, 432 (1984)); see also Campbell, 510 U.S. at 577-78.

- See, e.g., Sega, 977 F.2d at 1518-19 (“[T]he Copyright Act does not distinguish between unauthorized copies of a copyrighted work on the basis of what stage of the alleged infringer’s work the unauthorized copies represent.”).

- Cf. Perfect 10, Inc. v. Amazon.com, Inc., 508 F.3d 1146, 1161 (9th Cir. 2007) (HTML instructions directing a user’s browser to a website publisher’s computer that stores a full-size image are “not equivalent to showing a copy” of the image).

- See Litchfield v. Spielberg, 736 F.2d 1352, 1357 (9th Cir. 1984) (“A work will be considered a derivative work only if it would be considered an infringing work if the material which it has derived from a prior work had been taken without the consent of a copyright proprietor of such prior work.” (cleaned up)); see also Tanksley v. Daniels, 902 F.3d 165, 174 (3d Cir. 2018) (to infringe, a work must be “substantially similar” to the underlying work, which requires a “side-by-side comparison of the works” to determine “whether a lay-observer would believe that the copying was of protectible aspects of the copyrighted work” (cleaned up)).

- Artists’ Case, Stability AI Am. MTD at 6-7; DeviantArt MTD at 11-12; Midjourney MTD at 9-11. As the defendants also point out, the plaintiff artists have failed to allege that each plaintiff’s works were registered for copyright protection before filing suit. Stability AI Am. MTD at 4-6; DeviantArt MTD at 9-10; Midjourney MTD at 7-8.

- See, e.g., Getty Am. Compl. ¶¶ 28, 38, 76.

- Bell v. Wilmott Storage Servs., LLC, 12 F.4th 1065, 1074 (9th Cir. 2021) (“[I]f the degree of copying is merely de minimis, then it is non-actionable.”).

- Artists’ Compl. ¶ 93.

- Steinberg v. Columbia Pictures Indus., Inc., 663 F. Supp. 706, 712 (S.D.N.Y. 1987) (granting summary judgment of infringement where defendant’s movie poster copied plaintiff’s “sketchy, whimsical style” and included similar colors, techniques, vantage point, and subject matter depicted in plaintiff’s cartoon).

- See, e.g., Williams v. 3DExport, 2020 WL 532418, at *3 (E.D. Mich. Feb. 3, 2020) (noting with regard to anime art style that one “could only have a protectible copyright interest in his specific expression of that idea; he could not lay claim to all anime that ever was or will be produced”); Trek Leasing, Inc. v. United States, 66 Fed. Cl. 8, 13 (2005) (“Plaintiff’s idea to use the BIA Pueblo Revival style in constructing the Fort Defiance Post Office is not, in itself, protectable. Nevertheless, certain portions of the BIA Pueblo Revival style can be protectable, since the style is susceptible to multiple forms of expression.” (citations omitted)).

- See Grossman Designs, Inc. v. Bortin, 347 F. Supp. 1150, 1156-57 (N.D. Ill. 1972) (“Picasso may be entitled to a copyright on his portrait of three women painted in his Cubist motif. Any artist, however, may paint a picture of any subject in the Cubist motif, including a portrait of three women, and not violate Picasso’s copyright so long as the second artist does not substantially copy Picasso’s specific expression of his idea.”).

- See Sony, 464 U.S. at 442-56 (finding Sony’s Betamax technology capable of substantial noninfringing uses, which negated contributory infringement claim).

- 17 U.S.C. § 107(1).

- Campbell, 510 U.S. at 579 (cleaned up).

- Warhol, 143 S. Ct. at 1274.

- Id. at 1277; see also id. at 1276 (“[T]he fact that a use is commercial as opposed to nonprofit is an additional element of the first factor.” (cleaned up)).

- Authors Guild v. Google, Inc., 804 F.3d 202, 221-22 (2d Cir. 2015) (“While Google makes an unauthorized digital copy of the entire book, it does not reveal that digital copy to the public. The copy is made to enable the search functions to reveal limited, important information about the books.”).

- Id.; Authors Guild, Inc. v. HathiTrust, 755 F.3d 87, 97 (2d Cir. 2014).

- See, e.g., Kelly v. Arriba Soft Corp., 336 F.3d 811 (9th Cir. 2003) (providing thumbnail-image search results is transformative); Perfect 10, 508 F.3d 1146 (same); see also AV ex rel. Vanderhye v. iParadigms, LLC, 562 F.3d 630 (4th Cir. 2009) (storing copies of essays for detecting plagiarism is transformative). But see Hachette Book Grp., Inc. v. Internet Archive, ___ F. Supp. 3d ___, 2023 WL 2623787 (S.D.N.Y. Mar. 24, 2023) (recasting print book into ebook for digital library is not transformative).

- See Google LLC v. Oracle Am., Inc., 141 S. Ct. 1183, 1209 (2021) (copying of Java SE API to make compatible software found to be fair use); Sega, 977 F.2d at 1522-23 (disassembling source code to study functionality in order to make compatible video games found to be fair use); see also Sony Comput. Entm’t, Inc. v. Connectix Corp., 203 F.3d 596, 602-08 (9th Cir. 2000) (“Connectix’s intermediate copying and use of Sony’s copyrighted BIOS was a fair use for the purpose of gaining access to the unprotected elements of Sony’s software.”); Bateman v. Mnemonics, Inc., 79 F.3d 1532, 1540 n.18 (11th Cir. 1996) (adopting Sega’s application of fair-use doctrine to reverse-engineering activities seeking to gain access to nonprotectable ideas and functional elements).

- Warhol, 143 S. Ct. at 1277 n.8 (citing Google, 141 S. Ct. at 1203).

- See 17 U.S.C. § 107.

- Sega, 977 F.2d at 1522 (“[A]lthough Accolade’s ultimate purpose was the release of Genesis-compatible games for sale, its direct purpose in copying Sega’s code, and thus its direct use of the copyrighted material, was simply to study the functional requirements for Genesis compatibility so that it could modify existing games and make them usable with the Genesis console.”).

- Dr. Seuss Enters., L.P. v. ComicMix LLC, 983 F.3d 443, 454 (9th Cir. 2020).

- Warhol, 143 S. Ct. at 1287-88.

- Stability AI does, however, also offer a paid version of its technology (called DreamStudio).

- Getty Am. Compl. ¶ 66; Dawn Chmielewski & Stephen Nellis, Adobe, Nvidia AI Imagery Systems Aim to Resolve Copyright Questions, Reuters (Mar. 21, 2023), https://www.reuters.com/technology/adobe-nvidia-ai-imagery-systems-aim-resolve-copyright-questions-2023-03-21.

- Andy Warhol Found. for Visual Arts, Inc. v. Goldsmith, 11 F.4th 26, 41 (2d Cir. 2021), aff’d, No. 21-869.

- Further, even if Stable Diffusion were no more than a “collaging tool,” as the plaintiff artists allege, that fact would not necessarily negate a fair-use defense, as courts have found photograph collages to be transformative in certain circumstances. See, e.g., Cariou v. Prince, 714 F.3d 706, 706 (2d Cir. 2013) (incorporation of “serene and deliberately composed portraits and landscape photographs” into a “crude and jarring” collage was sufficiently transformative because it created “new information, new aesthetics, new insights and understandings”); Blanch v. Koons, 467 F.3d 244, 253 (2d Cir. 2006) (incorporating a copyrighted photograph into a painting was transformative because the photograph was juxtaposed alongside other images with changes to color, the background, the medium, and the size of objects so as to create a different form of art that comments “on the social and aesthetic consequences of mass media”).

- 17 U.S.C. § 107(2); Campbell, 510 U.S. at 586.

- Harper & Row Publishers, Inc. v. Nation Enters., 471 U.S. 539, 562-63 (1985).

- 17 U.S.C. § 107(3); Campbell, 510 U.S. at 586 (cleaned up).

- See Kelly, 336 F.3d at 820-21 (“If the secondary user only copies as much as is necessary for his or her intended use, then this factor will not weigh against him or her[.] . . . [A]lthough Arriba did copy each of Kelly’s images as a whole, it was reasonable to do so in light of Arriba’s use of the images.”).

- 17 U.S.C. § 107(4); Campbell, 510 U.S. at 590 (cleaned up).

- See Kelly, 336 F.3d at 821-22 (“The thumbnails would not be a substitute for the full-sized images because the thumbnails lose their clarity when enlarged. . . . Anyone who downloaded the thumbnails would not be successful selling full-sized images enlarged from the thumbnails because of the low resolution of the thumbnails.”); see also id. at 821 n.37 (noting that, at least for photographic images, “the quality of the reproduction may matter more than in other fields of creative endeavor” because “[t]he appearance of photographic images accounts for virtually their entire aesthetic value”).

- Sega, 977 F.2d at 1527.

- Warhol, 143 S. Ct. at 1293 (Kagan, J., dissenting).

- Id. at 1297 (cleaned up).

- Sony, 464 U.S. at 431 (“Sound policy, as well as history, supports our consistent deference to Congress when major technological innovations alter the market for copyrighted materials.”).

- Oversight of A.I.: Rules for Artificial Intelligence, S. Comm. on the Judiciary, Subcomm. on Privacy, Tech., and the Law (May 16, 2023), https://www.judiciary.senate.gov/committee-activity/hearings/oversight-of-ai-rules-for-artificial-intelligence; Artificial Intelligence and Intellectual Property: Part I—Interoperability of AI and Copyright Law, H.R. Judiciary Comm., Subcomm. on Courts, Intell. Prop., and the Internet (May 17, 2023), https://judiciary.house.gov/committee-activity/hearings/artificial-intelligence-and-intellectual-property-part-i.

- Benj Edwards, Stability AI Plans to Let Artists Opt Out of Stable Diffusion 3 Image Training, Ars Technica (Dec. 15, 2022), https://arstechnica.com/information-technology/2022/12/stability-ai-plans-to-let-artists-opt-out-of-stable-diffusion-3-image-training/.

- See, e.g., Doe 1 v. GitHub, Inc., ___ F. Supp. 3d ___, 2023 WL 3449131, at *2 (N.D. Cal. May 11, 2023) (DMCA lawsuit involving “AI-based program that can assist software coders by providing or filling in blocks of code using AI” (cleaned up)).

- See Wes Davis, Sarah Silverman Is Suing OpenAI and Meta for Copyright Infringement, The Verge (July 9, 2023), https://www.theverge.com/2023/7/9/23788741/sarah-silverman-openai-meta-chatgpt-llama-copyright-infringement-chatbots-artificial-intelligence-ai.

- See Delose Prime, Japan Goes All In: Copyright Doesn’t Apply to AI Training, Technomancers.ai (May 30, 2023), https://technomancers.ai/japan-goes-all-in-copyright-doesnt-apply-to-ai-training/.
